《I want to talk about WebGPU》翻译

中文标题:《我想谈谈 WebGPU》,原文地址

WebGPU is the new WebGL.

WebGPU 是新的 WebGL。

That means it is the new way to draw 3D in web browsers.

这意味着它是在网络浏览器中绘制 3D 的新方法。

It is, in my opinion, very good actually.

在我看来,这实际上非常好。

It is so good I think it will also replace Canvas and become the new way to draw 2D in web browsers.

它太好了,我认为它也将取代 Canvas 并成为在 Web 浏览器中绘制 2D 的新方式。

In fact it is so good I think it will replace Vulkan as well as normal OpenGL, and become just the standard way to draw, in any kind of software, from any programming language.

事实上,它非常好,我认为它将取代 Vulkan 以及普通的 OpenGL,并成为在任何类型的软件中使用任何编程语言进行绘图的标准方式。

This is pretty exciting to me. WebGPU is a little bit irritating— but only a little bit, and it is massively less irritating than any of the things it replaces.

这让我很兴奋。WebGPU 有点让人恼火,但只是一点点,而且远没有它所取代的任何东西那么让人恼火。

WebGPU goes live… today, actually.

WebGPU 上线……实际上是今天。

Chrome 113 shipped in the final minutes of me finishing this post and should be available in the "About Chrome" dialog right this second.

Chrome 113 在我完成这篇文章的最后几分钟发布了,此刻应该已经可以在“关于 Chrome”对话框中获取。

If you click here, and you see a rainbow triangle, your web browser has WebGPU.

如果你点击这里并看到一个彩虹三角形,就说明你的浏览器支持 WebGPU。
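
A minimal sketch of what such a test page checks first. It uses hand-written stand-in interfaces (`GpuLike`, `NavigatorLike`, both hypothetical) instead of the real `@webgpu/types` typings so it can run outside a browser: `navigator.gpu` must exist, and `requestAdapter()` must resolve to an adapter rather than `null`.

下面是一个最小草图,演示这类测试页面首先会检查什么。它使用手写的替身接口(`GpuLike`、`NavigatorLike`,均为假设),而不是真正的 `@webgpu/types` 类型定义,以便能在浏览器之外运行:`navigator.gpu` 必须存在,并且 `requestAdapter()` 必须返回一个适配器而不是 `null`。

```typescript
// Hypothetical stand-ins for the real WebGPU typings (@webgpu/types).
interface GpuLike {
  // Resolves to an adapter object, or to null if none is available.
  requestAdapter(): Promise<unknown>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

type WebGPUStatus = "no-api" | "no-adapter" | "ok";

async function detectWebGPU(nav: NavigatorLike | undefined): Promise<WebGPUStatus> {
  // No navigator.gpu: this browser does not expose WebGPU at all.
  if (nav === undefined || nav.gpu === undefined) return "no-api";
  // The API exists, but there may still be no usable adapter.
  const adapter = await nav.gpu.requestAdapter();
  return adapter === null ? "no-adapter" : "ok";
}

// In a page: detectWebGPU(navigator).then((status) => console.log(status));
```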

By the end of the year WebGPU will be everywhere, in every browser.

到今年年底,WebGPU 将无处不在,出现在每个浏览器中。

(All of this refers to desktop computers. On phones, it won't be in Chrome until later this year; and Apple I don't know. Maybe one additional year after that.)

(以上都是指台式电脑。在手机上,Chrome 要到今年晚些时候才会支持;至于苹果,我不知道,也许还要再晚一年。)

If you are not a programmer, this probably doesn't affect you.

如果您不是程序员,这可能不会影响您。

It might get us closer to a world where you can just play games in your web browser as a normal thing like you used to be able to with Flash.

它可能会让我们更接近一个世界,在这个世界中,您可以像以前使用 Flash 一样在网络浏览器中正常玩游戏。

But probably not, because WebGL wasn't the only problem there.

但可能也不会,因为 WebGL 并不是那里唯一的问题。

If you are a programmer, let me tell you what I think this means for you.

如果你是一名程序员,让我告诉你我认为这对你意味着什么。

Sections below:

- A history of graphics APIs (You can skip this)

- 图形API的历史(您可以跳过这部分)

- What's it like? How do I use it?

- 它是什么样子的?我该如何使用它?

- Typescript / NPM world

- TypeScript / NPM世界

- I don't know what a NPM is I Just wanna write CSS and my stupid little script tags

- 我不知道什么是NPM,我只想写CSS和我的一些简单脚本标签

- Rust Clap / C++ / Posthuman Intersecting Tetrahedron

- Rust / C++ / 后人类相交四面体

A history of graphics APIs (You can skip this)

图形 API 的历史(你可以跳过这部分)

Back in the dawn of time there were two ways to make 3D on a computer: You did a bunch of math; or you bought an SGI machine.

在远古时代,要在计算机上做 3D 只有两种办法:要么自己做一大堆数学运算,要么买一台 SGI 机器。

SGI were the first people who were designing circuitry to do the rendering parts of a 3D engine for you.

SGI 是第一批设计电路来为您完成 3D 引擎渲染部分的人。

They had this C API for describing your 3D models to the hardware.

他们有这个 C API 用于向硬件描述您的 3D 模型。

At some point it became clear that people were going to start making plugin cards for regular desktop computers that could do the same acceleration as SGI's big UNIX boxes, so SGI released a public version of their API so it would be possible to write code that would work both on the UNIX boxes and on the hypothetical future PC cards.

到了某个时候,情况变得很明显:人们将开始为普通台式计算机制造扩展卡,实现与 SGI 大型 UNIX 机器相同的加速。因此 SGI 发布了其 API 的公开版本,这样写出的代码既能在 UNIX 机器上运行,也能在设想中的未来 PC 显卡上运行。

This was OpenGL. color() and rectf() in IRIS GL became glColor() and glRectf() in OpenGL.

这是 OpenGL。 IRIS GL 中的 color() 和 rectf() 变成了 OpenGL 中的 glColor() 和 glRectf()。

When the PC 3D cards actually became a real thing you could buy, things got real messy for a bit.

当 PC 3D 卡真正成为你可以买到的东西时,事情变得有点混乱。

Instead of signing on with OpenGL Microsoft had decided to develop their own thing (Direct3D) and some of the 3D card vendors also developed their own API standards, so for a while certain games were only accelerated on certain graphics cards and people writing games had to write their 3D pipelines like four times, once as a software renderer and a separate one for each card type they wanted to support.

微软没有加入 OpenGL,而是决定开发自己的东西(Direct3D),一些 3D 显卡厂商也开发了自己的 API 标准。所以有一段时间,某些游戏只能在某些显卡上获得加速,写游戏的人不得不把 3D 管线写上大约四遍:一遍作为软件渲染器,再为每种想支持的显卡各写一遍。

My perception is it was Direct3D, not OpenGL, which eventually managed to wrangle all of this into a standard, which really sucked if you were using a non-Microsoft OS at the time.

我的印象是,最终将所有这些东西整合为标准的是Direct3D,而不是OpenGL。如果你当时使用的是非Microsoft操作系统,那么这真的很糟糕。

It really seemed like DirectX (and the "X Box" standalone console it spawned) were an attempt to lock game companies into Microsoft OSes by getting them to wire Microsoft exclusivity into their code at the lowest level, and for a while it really worked.

DirectX(以及它衍生出的“X Box”独立游戏机)似乎是试图通过让游戏公司在最低层次上将 Microsoft 独占性编码到他们的代码中来将游戏公司限制在 Microsoft 操作系统中,并且一段时间内它确实起到了作用。

It is the case though it wasn't very long into the Direct3D lifecycle before you started hearing from Direct3D users that it was much, much nicer to use than OpenGL, and OpenGL quickly got to a point where it was literally years behind Direct3D in terms of implementing critical early features like shaders, because the Architecture Review Board of card vendors that defined OpenGL would spend forever bickering over details whereas Microsoft could just implement stuff and expect the card vendor to work it out.

尽管如此,Direct3D 问世后没过多久,你就开始听到 Direct3D 用户说它比 OpenGL 好用得多得多;而 OpenGL 很快就落后到了这种地步:在实现着色器等关键的早期功能方面,它比 Direct3D 落后了好几年。这是因为定义 OpenGL 的、由显卡厂商组成的架构审查委员会(ARB)会没完没了地争论细节,而微软可以直接实现功能,然后指望显卡厂商自己想办法。

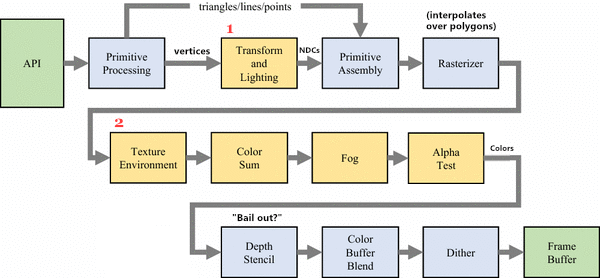

Let's talk about shaders. The original OpenGL was a "fixed function renderer", meaning someone had written down the steps in a 3D renderer and it performed those steps in order.

让我们来谈谈着色器。最初的 OpenGL 是一个“固定功能渲染器”,意味着某个人已经把3D渲染器中的步骤写下来,然后按顺序执行这些步骤。

Modified Khronos Group image

修改后的Khronos Group图像

Each box in the "pipeline" had some dials on the side so you could configure how each feature behaved, but you were pretty much limited to the features the card vendor gave you.

“流水线”中的每个框侧面都有一些旋钮,你可以用它们配置每个功能的行为,但基本上只能使用显卡厂商提供给你的那些功能。

If you had shadows, or fog, it was because OpenGL or an extension had exposed a feature for drawing shadows or fog.

如果您有阴影或雾,那是因为 OpenGL 或扩展已经公开了绘制阴影或雾的功能。

What if you want some other feature the ARB didn't think of, or want to do shadows or fog in a unique way that makes your game look different from other games?

如果您想要ARB没有考虑的其他功能,或者想以独特的方式进行阴影或雾处理,以使您的游戏与其他游戏不同,那该怎么办呢?

Sucks to be you. This was obnoxious, so eventually "programmable shaders" were introduced.

那你就只能自认倒霉了。这很讨厌,所以最终引入了“可编程着色器”。

Notice some of the boxes above are yellow? Those boxes became replaceable.

注意上面的一些框是黄色的吗?这些框变得可替换了。

The (1) boxes got collapsed into the "Vertex Shader", and the (2) boxes became the "Fragment Shader"².

(1) 框被合并为“顶点着色器”,(2) 框变成了“片段着色器”²。

The software would upload a computer program in a simple C-like language (upload the actual text of the program, you weren't expected to compile it like a normal program)³ into the video driver at runtime, and the driver would convert that into configurations of ALUs (or whatever the card was actually doing on the inside) and your program would become that chunk of the pipeline.

软件会在运行时把一个用简单的类 C 语言写成的程序上传到显卡驱动中(上传的是程序的实际文本,你不需要像普通程序那样自己编译它)³,驱动会把它转换成 ALU 的配置(或者显卡内部实际在做的任何事情),于是你的程序就成了管线中的那一块。
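
To make the difference between turning dials and replacing a box concrete, here is a CPU-side toy in TypeScript. It is not how any driver works: the fog dials and the `redHaze` function are hypothetical, and the exponential formula only mirrors the classic `GL_EXP` fog mode. In real OpenGL the programmable version would be GLSL source text handed to the driver at runtime.

为了把“拧旋钮”和“替换方框”的区别说具体,下面是一个在 CPU 上运行的 TypeScript 玩具示例。它并不是任何驱动的真实工作方式:雾的旋钮和 `redHaze` 函数都是假设的,指数公式只是模仿经典的 `GL_EXP` 雾模式。在真正的 OpenGL 中,可编程版本会是运行时交给驱动的 GLSL 源代码文本。

```typescript
type RGB = [number, number, number];

// Fixed-function style: the vendor wrote the fog formula; you only turn dials.
interface FogDials {
  enabled: boolean;
  color: RGB;
  density: number;
}

function fixedFunctionFragment(base: RGB, depth: number, fog: FogDials): RGB {
  if (!fog.enabled) return base;
  // Exponential fog as in GL_EXP: f = exp(-density * depth), f = 1 means no fog.
  const f = Math.exp(-fog.density * depth);
  const mix = (c: number, fc: number): number => c * f + fc * (1 - f);
  return [mix(base[0], fog.color[0]), mix(base[1], fog.color[1]), mix(base[2], fog.color[2])];
}

// Programmable style: the stage is whatever function you supply.
type FragmentShader = (base: RGB, depth: number) => RGB;

function programmableFragment(base: RGB, depth: number, shader: FragmentShader): RGB {
  return shader(base, depth);
}

// Hypothetical effect the fixed pipeline never offered: redden distant pixels only.
const redHaze: FragmentShader = (base, depth) =>
  depth > 10 ? [Math.min(1, base[0] + 0.5), base[1], base[2]] : base;
```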

This opened things up a lot, but more importantly it set card design on a kinda strange path.

这让很多事情成为可能,但更重要的是,它让显卡设计走上了一条有点奇怪的道路。

Suddenly video cards weren't specialized rendering tools anymore. They ran software.

突然间,视频卡不再是专门的渲染工具了。它们运行软件。

Pretty shortly after this was another change. Handheld devices were starting to get to the point it made sense to do 3D rendering on them (or at least, to do 2D compositing using 3D video card hardware like desktop machines had started doing).

不久之后又出现了另一个变化。手持设备开始发展到值得在上面进行 3D 渲染的程度(或者至少像台式机已经开始做的那样,用 3D 显卡硬件进行 2D 合成)。

DirectX was never in the running for these applications.

DirectX 从未参与过这类应用的竞争。

But implementing OpenGL on mid-00s mobile silicon was rough.

但在 00 年代中期的移动芯片上实现 OpenGL 很困难。

OpenGL was kind of… large, at this point.

此时的 OpenGL 已经变得有些庞大。

It had all these leftover functions from the SGI IRIX era, and then it had this new shiny OpenGL 2.0 way of doing things with the shaders and everything and not only did this mean you basically had two unrelated APIs sitting side by side in the same API, but also a lot of the OpenGL 1.x features were traps.

它遗留了许多SGI IRIX时代的函数,然后有了这个新的、闪亮的OpenGL 2.0的做事方式,这意味着你基本上在同一个API中拥有两个不相关的API,而且OpenGL 1.x的许多功能都是陷阱。

The spec said that every video card had to support every OpenGL feature, but it didn't say it had to support them in Hardware, so there were certain early-90s features that 00s card vendors had decided nobody really uses, and so if you used those features the driver would render the screen, copy the entire screen into regular RAM, perform the feature on the CPU and then copy the results back to the video card.

规范规定每个显卡都必须支持每个OpenGL功能,但并没有规定必须在硬件上支持它们,因此00年代的显卡厂商已经决定没有人真正使用某些早期的90年代的功能,所以如果你使用了这些功能,驱动程序将渲染屏幕,将整个屏幕复制到常规RAM中,在CPU上执行该功能,然后将结果复制回显卡。

Accidentally activating one of these trap features could easily move you from 60 FPS to 1 FPS.

意外地激活其中一个陷阱功能很容易将帧速从60帧降至1帧。

All this legacy baggage promised a lot of extra work for the manufacturers of the new mobile GPUs, so to make it easier Khronos (which is what the ARB had become by this point) introduced an OpenGL "ES", which stripped out everything except the features you absolutely needed.

所有这些遗留包袱意味着新移动 GPU 的制造商要做大量额外工作,因此为了让事情更容易,Khronos(ARB 此时已经变成了它)推出了 OpenGL“ES”,剥离了除绝对必需的功能之外的一切。

Instead of being able to call a function for each polygon or each vertex you had to use the newer API of giving OpenGL a list of coordinates in a block in memory⁴, you had to use either the fixed function or the shader pipeline with no mixing (depending on whether you were using ES 1.x or ES 2.x), etc.

你不能再为每个多边形或每个顶点调用一次函数,而必须使用较新的 API,把坐标列表放在一块内存里交给 OpenGL⁴;你必须在固定功能管线和着色器管线中二选一,不能混用(取决于你用的是 ES 1.x 还是 ES 2.x),等等。

This partially made things simpler for programmers, and partially prompted some annoying rewrites.

这在某种程度上简化了程序员的工作,但也促使了一些恼人的重写。

But as with shaders, what's most important is the long-term strange-ing this change presaged: Starting at this point, the decisions of Khronos increasingly were driven entirely by the needs and wants of hardware manufacturers, not programmers.

但和着色器一样,最重要的是这一变化所预示的长期走向:事情变得越来越奇怪。从这时起,Khronos 的决策越来越完全由硬件制造商的需求和愿望驱动,而不是由程序员的需求驱动。

With OpenGL ES devices in the world, OpenGL started to graduate from being "that other graphics API that exists, I guess" and actually take off.

随着 OpenGL ES 设备遍布世界,OpenGL 开始摆脱“好像还存在着的另一个图形 API”的身份,真正腾飞起来。

The iPhone, which used OpenGL ES, gave a solid mass-market reason to learn and use OpenGL.

使用 OpenGL ES 的 iPhone 给人们提供了一个坚实的大众市场理由去学习和使用 OpenGL。

Nintendo consoles started to use OpenGL or something like it.

任天堂的游戏机也开始使用OpenGL或类似的技术。

OpenGL had more or less caught up with DirectX in features, especially if you were willing to use extensions.

OpenGL 在功能上已经或多或少赶上了 DirectX,特别是如果你愿意使用扩展的话。

Browser vendors, in that spurt of weird hubris that gave us the original WebAudio API, adapted OpenGL ES into JavaScript as "WebGL", which makes no sense because as mentioned OpenGL ES was all about packing bytes into arrays full of geometry and JavaScript doesn't have direct memory access or even integers, but they added packed binary arrays to the language and did it anyway.

浏览器厂商在那股给我们带来了最初版 WebAudio API 的古怪自负劲头中,把 OpenGL ES 改编进了 JavaScript,称为“WebGL”。这毫无道理,因为如前所述,OpenGL ES 的核心就是把字节打包进装满几何数据的数组,而 JavaScript 没有直接内存访问,甚至没有整数;但他们往语言里加上了打包的二进制数组,硬是做成了。
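
The "packed binary arrays" are typed arrays: views such as `Float32Array`, `Uint8Array` or `DataView` over one raw `ArrayBuffer`. A minimal sketch, assuming an illustrative interleaved layout of three `float32` position components plus four `uint8` color components (16 bytes per vertex); `packVertices` is a made-up helper name.

所谓“打包的二进制数组”就是类型化数组:在同一块原始 `ArrayBuffer` 之上的 `Float32Array`、`Uint8Array` 或 `DataView` 等视图。下面是一个最小草图,假设采用一种示意性的交错布局:每个顶点包含三个 `float32` 位置分量和四个 `uint8` 颜色分量(每顶点 16 字节);`packVertices` 是虚构的辅助函数名。

```typescript
interface Vertex {
  pos: [number, number, number]; // x, y, z, stored as 32-bit floats
  rgba: [number, number, number, number]; // 0..255 each, stored as bytes
}

const BYTES_PER_VERTEX = 3 * 4 + 4 * 1; // 16 bytes

function packVertices(vertices: Vertex[]): ArrayBuffer {
  const buffer = new ArrayBuffer(vertices.length * BYTES_PER_VERTEX);
  const view = new DataView(buffer);
  vertices.forEach((v, i) => {
    const base = i * BYTES_PER_VERTEX;
    // DataView defaults to big-endian; GPU vertex data is read in the
    // platform's byte order, which is little-endian in practice.
    v.pos.forEach((c, j) => view.setFloat32(base + j * 4, c, true));
    v.rgba.forEach((c, j) => view.setUint8(base + 12 + j, c));
  });
  return buffer;
}
```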

So with all this activity, sounds like things are going great, right?

既然有这么多动静,听起来一切都很顺利,对吧?

No! Everything was terrible!

不!一切都很糟糕!

As it matured, OpenGL fractured into a variety of slightly different standards with varying degrees of cross-compatibility.

随着时间推移,OpenGL变得越来越分裂,产生了一系列略有不同的标准和不同程度的交叉兼容性。

OpenGL ES 2.0 was the same as OpenGL 3.3, somehow.

OpenGL ES 2.0 和 OpenGL 3.3 竟然是一样的。

WebGL 2.0 is very almost OpenGL ES 3.0 but not quite.

WebGL 2.0 非常接近 OpenGL ES 3.0,但又不完全相同。

Every attempt to resolve OpenGL's remaining early mistakes seemed to wind up duplicating the entire API as new functions with slightly different names and slightly different signatures.

每次尝试解决 OpenGL 早期的错误都似乎会重复整个 API,并使用稍微不同的名称和签名的新函数。

A big usability issue with OpenGL was even after the 2.0 rework it had a lot of shared global state, but the add-on systems that were supposed to resolve this (VAOs and VBOs) only wound up being even more global state you had to keep track of.

OpenGL 的一个重大可用性问题是,即使在2.0重新设计后,它仍具有许多共享全局状态,但用于解决此问题的附加系统(VAO 和 VBO)只会导致更多的全局状态需要跟踪。

A big trend in the 10s was "GPGPU" (General Purpose GPU);

在10年代的一个大趋势是“通用GPU”(GPGPU);

programmers started to realize that graphics cards worked as well as, but were slightly easier to program than, a CPU's vector units, so they just started accelerating random non-graphics programs by doing horrible hacks like stuffing them in pixel shaders and reading back a texture containing an encoded result.

程序员开始意识到,显卡用起来和 CPU 的向量单元一样好,编程却还稍微容易一些,于是他们开始用各种可怕的取巧手段来加速各种随便什么非图形程序,比如把程序塞进像素着色器,再读回一张包含编码结果的纹理。
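
One common form of the trick, sketched under the assumption that each result is a value in [0, 1): an 8-bit RGBA texel holds four bytes, so the shader writes the value as four base-256 digits and the CPU reassembles them after reading the texture back. Only the CPU half is shown; the function names are made up.

下面是这种技巧的一种常见形式的草图,假设每个结果都是 [0, 1) 区间内的值:一个 8 位 RGBA 纹素能存放四个字节,所以着色器把这个值写成四位 256 进制数字,CPU 在读回纹理之后再把它们拼回来。这里只展示 CPU 这一半;函数名都是虚构的。

```typescript
// Encode v in [0, 1) as 32-bit fixed point split into four bytes (R, G, B, A),
// most significant first. The shader side (not shown) would produce the same
// digits with float arithmetic, typically at lower precision.
function encodeUnit(v: number): Uint8Array {
  if (!(v >= 0 && v < 1)) throw new RangeError("value must be in [0, 1)");
  const fixed = Math.floor(v * 2 ** 32);
  return new Uint8Array([fixed >>> 24, (fixed >>> 16) & 0xff, (fixed >>> 8) & 0xff, fixed & 0xff]);
}

// Reassemble one texel's four bytes into the original value.
function decodeUnit(rgba: Uint8Array): number {
  return rgba.reduce((acc, byte) => acc * 256 + byte, 0) / 2 ** 32;
}

// Decode a whole read-back RGBA8 buffer: one result per texel.
function decodeReadback(pixels: Uint8Array): number[] {
  const results: number[] = [];
  for (let i = 0; i + 4 <= pixels.length; i += 4) {
    results.push(decodeUnit(pixels.subarray(i, i + 4)));
  }
  return results;
}
```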

Before finally resolving on compute shaders (in other words: before giving up and copying DirectX's solution), Khronos's original steps toward actually catering to this were either poorly adopted (OpenCL) or just plain bad ideas (geometry shaders).

在最终选定计算着色器之前(换句话说:在放弃并照搬 DirectX 的方案之前),Khronos 为真正满足这一需求而迈出的最初几步,要么采用率很低(OpenCL),要么干脆是馊主意(几何着色器)。

It all built up. Just like in the pre-ES era, OpenGL had basically become several unrelated APIs sitting in the same header file, some of which only worked on some machines.

所有这些都累积了起来。就像在ES之前的时代一样,OpenGL基本上已经成为同一头文件中的几个不相关的API,其中一些仅适用于某些机器。

Worse, nothing worked quite as well as you wanted it to;

更糟糕的是,没有什么东西完全符合您的期望;

different video card vendors botched the complexity, implementing features slightly differently (especially tragically, implementing slightly different versions of the shader language) or just badly, especially in the infamously bad Windows OpenGL drivers.

不同的显卡厂商都没能驾驭这种复杂性:它们实现功能的方式略有不同(尤其可悲的是,实现的着色器语言版本也略有不同),或者干脆实现得很糟,在臭名昭著的 Windows OpenGL 驱动程序中尤其如此。

The way out came from, this is how I see it anyway, a short-lived idea called "AZDO", which technically consisted of a single GDC talk⁵, but the idea the talk put name to is the underlying idea that spawned Vulkan, DirectX 12, and Metal.

在我看来,出路来自一个昙花一现的概念,叫“AZDO”。严格来说它只是一场 GDC 演讲⁵,但这场演讲所命名的那个想法,正是催生了 Vulkan、DirectX 12 和 Metal 的底层思想。

"Approaching Zero Driver Overhead". Here is the idea: By 2015 video cards had pretty much standardized on a particular way of working and that way was known and that way wasn't expected to change for ten years at least.

“逼近零驱动开销”(Approaching Zero Driver Overhead)。思路是这样的:到 2015 年,显卡的工作方式几乎已经标准化,这种方式众所周知,而且预计至少十年内不会改变。

Graphics APIs were originally designed around the functionality they exposed, but that functionality hadn't been a 1:1 map to how GPUs look on the inside for ten years at least.

图形API最初是围绕其所公开的功能设计的,但这些功能与GPU内部的工作方式已经至少有10年的时间不是1:1的映射关系了。

Drivers had become complex beasts that rather than just doing what you told them tried to intuit what you were trying to do and then do that in the most optimized way, but often they guessed wrong, leaving software authors in the ugly position of trying to intuit what the driver would intuit in any one scenario.

驱动程序已经成为复杂的野兽,而不是只执行你告诉它的操作,而是试图猜测你试图做什么,然后以最优化的方式进行操作,但通常它们猜错了,导致软件作者处于试图猜测驱动程序在任何一个场景下会猜测什么的尴尬境地。

AZDO was about threading your way through the needle of the graphics API in such a way your function calls happened to align precisely with what the hardware was actually doing, such that the driver had nothing to do and stuff just happened.

AZDO 讲的是如何像穿针引线一样使用图形 API,让你的函数调用恰好与硬件实际在做的事精确对齐,这样驱动程序就无事可做,事情自然就发生了。

Or we could just design the graphics API to be AZDO from the start. That's Vulkan. (And DirectX 12, and Metal.)

或者我们可以从一开始就设计一个 AZDO 图形 API。那就是 Vulkan(还有 DirectX 12 和 Metal)。

The modern generation of graphics APIs are about basically throwing out the driver, or rather, letting your program be the driver.

现代图形 API 的基本思想是扔掉驱动程序,或者说让你的程序成为驱动程序。

The API primitives map directly to GPU internal functionality⁶, and the GPU does what you ask without second guessing.

API 原语直接映射到 GPU 的内部功能⁶,GPU 照你的要求去做,不会自作主张。

This gives you an incredible amount of power and control.

这给你带来了极大的能力和控制。

Remember that "pipeline" diagram up top?

还记得上面的“管道”图吗?

The modern APIs let you define "pipeline objects"; while graphics shaders let you replace boxes within the diagram, and compute shaders let you replace the diagram with one big shader program, pipeline objects let you draw your own diagram.

现代 API 让你定义“管道对象”;虽然图形着色器让你替换图中的方块,计算着色器让你用一个大的着色器程序替换整个图,但管道对象让你自己画出图。

You decide what blocks of GPU memory are the sources, and which are the destinations, and how they are interpreted, and what the GPU does with them, and what shaders get called.

你决定哪些 GPU 内存块是源,哪些是目的地,如何解释它们,GPU 如何处理它们,调用哪些着色器。
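
In WebGPU, "how a block of memory is interpreted" is written down as plain data in the pipeline descriptor. The sketch below builds an object shaped like `GPUVertexBufferLayout` (`arrayStride`, `stepMode`, and `attributes` with `format`, `offset`, `shaderLocation`) for tightly packed attributes. The helper `packedLayout` is hypothetical, never touches a GPU, and knows only a few formats.

在 WebGPU 中,“如何解释一块内存”以普通数据的形式写在管线描述符里。下面的草图为紧密排列的属性构建一个形如 `GPUVertexBufferLayout` 的对象(`arrayStride`、`stepMode`,以及带有 `format`、`offset`、`shaderLocation` 的 `attributes`)。辅助函数 `packedLayout` 是假设的,它从不接触 GPU,也只认识几种格式。

```typescript
// Byte sizes of a few WebGPU vertex formats. All are multiples of 4,
// so packing them back to back keeps offsets and stride legally aligned.
const FORMAT_SIZES = {
  float32: 4,
  float32x2: 8,
  float32x3: 12,
  float32x4: 16,
  unorm8x4: 4,
} as const;

type VertexFormat = keyof typeof FORMAT_SIZES;

interface VertexAttribute {
  format: VertexFormat;
  offset: number;
  shaderLocation: number;
}

interface VertexBufferLayout {
  arrayStride: number;
  stepMode: "vertex" | "instance";
  attributes: VertexAttribute[];
}

// Lays attributes out back to back, binding them to @location(0), @location(1), ...
function packedLayout(formats: VertexFormat[]): VertexBufferLayout {
  let offset = 0;
  const attributes = formats.map((format, shaderLocation) => {
    const attr = { format, offset, shaderLocation };
    offset += FORMAT_SIZES[format];
    return attr;
  });
  return { arrayStride: offset, stepMode: "vertex", attributes };
}
```

The result would go into the `vertex.buffers` array of the descriptor passed to `device.createRenderPipeline()`.

结果会放进传给 `device.createRenderPipeline()` 的描述符的 `vertex.buffers` 数组中。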

All the old sources of confusion get resolved.

所有旧的混淆源都得到解决。

State is bound up in neatly defined objects instead of being global.

状态捆绑在定义良好的对象中,而不是全局的。

Card vendors always designed their shader compilers different, so we'll replace the textual shader language with a bytecode format that's unambiguous to implement and easier to write compilers for.

各显卡厂商的着色器编译器设计总是各不相同,所以我们用一种字节码格式取代文本着色器语言,这种格式实现起来没有歧义,也更容易为它编写编译器。

Vulkan goes so far as to allow⁷ you to write your own allocator/deallocator for GPU memory.

Vulkan 甚至允许⁷你为 GPU 内存编写自己的分配器/释放器。
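
In practice, writing your own allocator usually means sub-allocation: request a few large blocks of device memory (the number of live allocations is capped by the device limit `maxMemoryAllocationCount`) and hand out aligned offsets inside them. Below is a minimal linear (bump) allocator that tracks offsets only; the class is illustrative, and real allocators such as AMD's Vulkan Memory Allocator also handle freeing, memory types and fragmentation.

实际上,“自己编写分配器”通常意味着子分配:向设备申请少数几个大块内存(同时存在的分配数量受设备限制 `maxMemoryAllocationCount` 约束),再在其中分发对齐过的偏移量。下面是一个只跟踪偏移量的最小线性(bump)分配器;这个类仅作示意,像 AMD 的 Vulkan Memory Allocator 这样真正的分配器还要处理释放、内存类型和碎片。

```typescript
// Rounds `value` up to the next multiple of `alignment`.
function alignUp(value: number, alignment: number): number {
  return Math.ceil(value / alignment) * alignment;
}

// Hands out aligned sub-ranges of one large memory block, front to back.
class BumpAllocator {
  private head = 0;
  readonly capacity: number;

  constructor(capacity: number) {
    this.capacity = capacity;
  }

  // Returns the byte offset of a `size`-byte region, or null if the block is full.
  allocate(size: number, alignment: number): number | null {
    const offset = alignUp(this.head, alignment);
    if (offset + size > this.capacity) return null;
    this.head = offset + size;
    return offset;
  }

  // Linear allocators free everything at once, e.g. at the end of a frame.
  reset(): void {
    this.head = 0;
  }
}
```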

So this is all very cool.

这一切都很酷。

There is only one problem, which is that with all this fine-grained complexity, Vulkan winds up being basically impossible for humans to write.

只有一个问题,那就是在所有这些细粒度的复杂性中,Vulkan 最终变得对人类来说基本上是不可能写的。

Actually, that's not really fair.

实际上,这不太公平。

DX12 and Metal offer more or less the same degree of fine-grained complexity, and by all accounts they're not so bad to write.

DX12 和 Metal 提供了大致相同程度的细粒度复杂性,而据大家所说,它们写起来并没有那么糟。

The actual problem is that Vulkan is not designed for humans to write.

问题实际上是,Vulkan 不是为人类编写而设计的。

Literally. Khronos does not want you to write Vulkan, or rather, they don't want you to write it directly.

字面意义上的。Khronos 不希望你编写 Vulkan,或者更确切地说,他们不希望你直接编写它。

I was in the room when Vulkan was announced, across the street from GDC in 2015, and what they explained to our faces was that game developers were increasingly not actually targeting the gaming API itself, but rather targeting high-level middleware, Unity or Unreal or whatever, and so Vulkan was an API designed for writing middleware.

我在 2015 年 GDC 街对面的房间里听到 Vulkan 的发布,他们当面向我们解释了一件事:游戏开发人员越来越不是真正针对游戏 API 编写程序,而是针对高级中间件编写程序,如 Unity 或 Unreal 等,因此 Vulkan 是一种设计用于编写中间件的 API。

The middleware developers were also in the room at the time, the Unity and Epic and Valve guys. They were beaming as the Khronos guy explained this. Their lives were about to get much, much easier.

当时,Unity、Epic 和 Valve 的开发人员也在场,当 Khronos 的人解释这一点时,他们都在笑得合不拢嘴。他们的生活将变得轻松得多。

My life was about to get harder.

而我的生活即将变得更艰难。

Vulkan is weird— but it's weird in a way that makes a certain sort of horrifying machine sense.

Vulkan 很怪,但它怪得有一种令人毛骨悚然的机器逻辑。

Every Vulkan call involves passing in one or two huge structures which are themselves a forest of other huge structures, and every structure and sub-structure begins with a little protocol header explaining what it is and how big it is.

每个 Vulkan 调用都需要传入一个或两个庞大的结构体,这些结构体本身又是一个由其他庞大结构体组成的森林,并且每个结构体和子结构体都以一个小协议头开始,解释它是什么以及有多大。
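
In C that header is the `sType`/`pNext` pair: `sType` names the structure, and `pNext` points to an optional chain of extension structures, which is how new features get added without changing old function signatures. The TypeScript below only imitates the pattern; the tag strings and the `BaseInfo`/`NewFeatureInfo` types are hypothetical stand-ins for real `VK_STRUCTURE_TYPE_*` values and Vulkan structs.

在 C 中,这个头部就是 `sType`/`pNext` 这一对字段:`sType` 标明结构体是什么,`pNext` 指向一条可选的扩展结构体链,新功能就是这样加进来的,而不必改动旧的函数签名。下面的 TypeScript 只是模仿这种模式;标签字符串以及 `BaseInfo`/`NewFeatureInfo` 类型都是假设的,用来代替真正的 `VK_STRUCTURE_TYPE_*` 值和 Vulkan 结构体。

```typescript
// Every structure starts with the same two-field "protocol header".
interface ChainedStruct {
  sType: string; // stand-in for a VkStructureType value
  pNext: ChainedStruct | null; // next structure in the extension chain
}

// Hypothetical structures that carry the header plus their own fields.
interface BaseInfo extends ChainedStruct {
  sType: "BASE_INFO";
  size: number;
}

interface NewFeatureInfo extends ChainedStruct {
  sType: "NEW_FEATURE_INFO";
  enabled: boolean;
}

// Walks the pNext chain and returns the first structure with the given tag,
// skipping structures it does not recognize, as a driver or layer would.
function findInChain(head: ChainedStruct | null, sType: string): ChainedStruct | null {
  for (let s = head; s !== null; s = s.pNext) {
    if (s.sType === sType) return s;
  }
  return null;
}
```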

Before you allocate memory you have to fill out a structure to get back a structure that tells you what structure you're supposed to structure your memory allocation request in.

在分配内存之前,您必须填写一个结构体以获取一个告诉您应该以什么样的结构来请求内存分配的结构体。
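
Concretely: you call `vkGetBufferMemoryRequirements` to get a `VkMemoryRequirements` (`size`, `alignment`, `memoryTypeBits`), then search the device's memory types for one that is allowed by `memoryTypeBits` and has the property flags you need, and only then fill in `VkMemoryAllocateInfo`. The search is sketched below in TypeScript using the real values of three `VK_MEMORY_PROPERTY_*_BIT` flags; the interfaces are simplified stand-ins for the C structs.

具体来说:你调用 `vkGetBufferMemoryRequirements` 得到一个 `VkMemoryRequirements`(`size`、`alignment`、`memoryTypeBits`),然后在设备的内存类型中查找一个既被 `memoryTypeBits` 允许、又具备所需属性标志的类型,最后才填写 `VkMemoryAllocateInfo`。下面用 TypeScript 勾勒这个查找过程,使用了三个 `VK_MEMORY_PROPERTY_*_BIT` 标志的真实取值;接口是对 C 结构体的简化替身。

```typescript
const DEVICE_LOCAL = 0x1; // VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT
const HOST_VISIBLE = 0x2; // VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT
const HOST_COHERENT = 0x4; // VK_MEMORY_PROPERTY_HOST_COHERENT_BIT

interface MemoryRequirements {
  size: number;
  alignment: number;
  memoryTypeBits: number; // bit i set => memory type i is acceptable
}

interface MemoryType {
  propertyFlags: number;
  heapIndex: number;
}

// Returns the value for VkMemoryAllocateInfo.memoryTypeIndex,
// or -1 if no memory type satisfies both constraints.
function findMemoryType(req: MemoryRequirements, types: MemoryType[], wanted: number): number {
  for (let i = 0; i < types.length; i++) {
    const allowed = (req.memoryTypeBits & (1 << i)) !== 0;
    const t = types[i];
    if (allowed && t !== undefined && (t.propertyFlags & wanted) === wanted) return i;
  }
  return -1;
}
```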

None of it makes any sense— unless you've designed a programming language before, in which case everything you're reading jumps out to you as "oh, this is contrived like this because it's designed to be easy to bind to from languages with weird memory-management techniques" "this is a way of designing a forward-compatible ABI while making no assumptions about programming language" etc.

这一切都毫无道理,除非你以前设计过编程语言;如果设计过,你读到的每样东西都会跳出来告诉你:“哦,它之所以弄得这么拧巴,是为了方便从内存管理方式古怪的语言绑定过来”“这是一种在不对编程语言做任何假设的前提下设计向前兼容 ABI 的方法”,等等。

The docs are written in a sort of alien English that fosters no understanding— but it's also written exactly the way a hardware implementor would want in order to remove all ambiguity about what a function call does.

文档是用一种让人无从理解的外星英语写成的,但它的写法也恰恰是硬件实现者为了消除函数调用行为上的一切歧义所希望的那样。

In short, Vulkan is not for you. It is a byzantine contract between hardware manufacturers and middleware providers, and people like… well, me, are just not part of the transaction.

总之,Vulkan 不适合普通开发者,它是硬件制造商和中间件提供者之间的繁琐合同,像我这样的人只是旁观者。

Khronos did not forget about you and me. They just made a judgement, and this actually does make a sort of sense, that they were never going to design the perfectly ergonomic developer API anyway, so it would be better to not even try and instead make it as easy as possible for the perfectly ergonomic API to be written on top, as a library.

Khronos 并没有忘记你和我。他们只是做了一个判断(这其实也有一定道理):反正他们永远也设计不出完美符合人体工程学的开发者 API,所以干脆不去尝试,而是尽可能让别人能够以库的形式,在上层写出这种完美的 API。

Khronos thought within a few years of Vulkan⁸ being released there would be a bunch of high-quality open source wrapper libraries that people would use instead of Vulkan directly.

Khronos 认为,Vulkan⁸ 发布后几年内就会出现一批高质量的开源封装库,人们会使用这些库,而不是直接使用 Vulkan。

These libraries basically did not materialize. It turns out writing software is work and open source projects do not materialize just because people would like them to⁹.

这些库基本上没有出现。事实证明,写软件是件辛苦活,开源项目不会因为人们希望它们存在就自己冒出来⁹。

This leads us to the other problem, the one Vulkan developed after the fact. The Apple problem.

这带来了另一个问题,是 Vulkan 事后才产生的。那就是苹果问题。

The theory on Vulkan was it would change the balance of power where Microsoft continually released a high-quality cutting-edge graphics API and OpenGL was the sloppy open-source catch up.

Vulkan 背后的设想是,它将改变原有的力量格局:过去是微软不断推出高质量的前沿图形 API,而 OpenGL 是那个草率的开源追赶者。

Instead, the GPU vendors themselves would provide the API, and Vulkan would be the universal standard while DirectX would be reduced to a platform-specific oddity.

取而代之的是,由 GPU 厂商自己提供 API,Vulkan 将成为通用标准,而 DirectX 将沦为某个平台特有的异类。

But then Apple said no.

但是,苹果说不。

Apple (who had already launched their own thing, Metal) announced not only would they never support Vulkan, they would not support OpenGL, anymore¹⁰.

苹果(已经推出了自己的 Metal)宣布他们不仅永远不会支持 Vulkan,而且也不会再支持 OpenGL¹⁰。

From my perspective, this is just DirectX again;

从我的角度来看,这就是 DirectX 再次出现;

the dominant OS vendor of our era, as Microsoft was in the 90s, is pushing proprietary graphics tech to foster developer lock-in.

我们这个时代的主导操作系统供应商,就像微软在 90 年代一样,正在推出专有图形技术来促进开发者锁定。

But from Apple's perspective it probably looks like— well, the way DirectX probably looked from Microsoft's perspective in the 90s. They're ignoring the jagged-metal thing from the hardware vendors and shipping something their developers will actually want to use.

但从苹果的角度看,这可能就像,嗯,90 年代的微软眼中的 DirectX 那样。他们无视硬件厂商拿出来的那个满是毛刺的粗糙东西,推出了自家开发者真正愿意使用的东西。

With Apple out, the scene looked different.

苹果退出之后,局面就不一样了。

Suddenly there was a next-gen API for Windows, a next-gen API for Mac/iPhone, and a next-gen API for Linux/Android.

突然间,Windows、Mac/iPhone 和 Linux/Android 都有了新一代的API。

Except Linux has a severe driver problem with Vulkan and a lot of the Linux devices I've been checking out don't support Vulkan even now after it's been out seven years.

但是 Linux 在使用 Vulkan 时存在严重的驱动问题,即使在 Vulkan 发布七年之后,我所检查的很多 Linux 设备现在仍然不支持 Vulkan。

So really the only platform where Vulkan runs natively is Android.

因此,Vulkan 本地运行的唯一平台实际上是 Android。

This isn't that bad. Vulkan does work on Windows and there are mostly no problems, though people who have the resources to write a DX12 backend seem to prefer doing so.

这不是太糟糕的事情。Vulkan 确实可以在 Windows 上工作,而且大部分情况下没有问题,尽管有资源编写 DX12 后端的人似乎更喜欢这样做。

The entire point of these APIs is that they're flyweight things resting very lightly on top of the hardware layer, which means they aren't really that different, to the extent that a Vulkan-on-Metal emulation layer named MoltenVK exists and reportedly adds almost no overhead.

这些 API 的整个重点是它们是轻量级的东西,只是轻轻地搁在硬件层上,这意味着它们实际上并没有太大的差别,甚至还有一个名为 MoltenVK 的 Vulkan-on-Metal 仿真层,据报道几乎没有额外的开销。

But if you're an open source kind of person who doesn't have the resources to pay three separate people to write vaguely-similar platform backends, this isn't great.

但如果你是搞开源的那类人,没有资源雇三个人分别编写大同小异的平台后端,这就不太妙了。

Your code can technically run on all platforms, but you're writing in the least pleasant of the three APIs to work with and you get the advantage of using a true-native API on neither of the two major platforms.

你的代码理论上可以在所有平台上运行,但你使用的API是三个中最不愉快的,并且在两个主要平台中都无法获得使用真正本地API的优势。

You might even have an easier time just writing DX12 and Metal and forgetting Vulkan (and Android) altogether.

你甚至可能会更容易地编写DX12和Metal,然后完全忘记Vulkan(和Android)。

In short, Vulkan solves all of OpenGL's problems at the cost of making something that no one wants to use and no one has a reason to use.

简而言之,Vulkan 解决了 OpenGL 的所有问题,但代价是做出了一个没有人想用、也没有人有理由用的东西。

The way out turned out to be something called ANGLE. Let me back up a bit.

出路最终是一个叫 ANGLE 的东西。让我先往回说一点。

WebGL was designed around OpenGL ES.

WebGL是围绕OpenGL ES设计的。

But it was never exactly the same as OpenGL ES, and also technically OpenGL ES never really ran on desktops, and also regular OpenGL on desktops had Problems.

但它从来不完全与OpenGL ES相同,而且OpenGL ES从技术上来说从未真正运行在桌面上,桌面上的常规OpenGL也有问题。

So the browser people eventually realized that if you wanted to ship an OpenGL compatibility layer on Windows, it was actually easier to write an OpenGL emulator in DirectX than it was to use OpenGL directly and have to negotiate the various incompatibilities between OpenGL implementations of different video card drivers.

所以浏览器开发者最终意识到,如果你想在Windows上发布一个OpenGL兼容层,实际上更容易直接在DirectX中编写一个OpenGL仿真器,而不是直接使用OpenGL并必须协调不同视频卡驱动程序的OpenGL实现之间的各种不兼容性。

The browser people also realized that if slight compatibility differences between different OpenGL drivers was hell, slight incompatibility differences between four different browsers times three OSes times different graphics card drivers would be the worst thing ever.

浏览器开发者还意识到,如果不同OpenGL驱动程序之间的轻微兼容性差异已经很糟糕了,那么四个不同浏览器乘以三个操作系统乘以不同的显卡驱动程序之间的轻微不兼容性差异将是最糟糕的事情。

From what I can only assume was desperation, the most successful example I've ever seen of true cross-company open source collaboration emerged: ANGLE, a BSD-licensed OpenGL emulator originally written by Google but with honest-to-goodness contributions from both Firefox and Apple, which is used for WebGL support in literally every web browser.

我只能猜测是出于绝望,于是出现了我所见过的最成功的真正跨公司开源协作:ANGLE,一个采用 BSD 许可证的 OpenGL 模拟器,最初由 Google 编写,但 Firefox 和 Apple 都做出了实打实的贡献,名副其实地被每一个 Web 浏览器用来支持 WebGL。

But nobody actually wants to use WebGL, right?

但是实际上,没有人真正想使用WebGL,对吗?

We want a "modern" API, one of those AZDO thingies.

我们想要一个“现代”的API,就像那些AZDO一样。

So a W3C working group sat down to make Web Vulkan, which they named WebGPU.

因此,W3C工作组开始着手制定Web Vulkan,他们将其命名为WebGPU。

I'm not sure my perception of events is to be trusted, but my perception of how this went from afar was that Apple was the most demanding participant in the working group, and also the participant everyone would naturally by this point be most afraid of just spiking the entire endeavor, so reportedly Apple just got absolutely everything they asked for and WebGPU really looks a lot like Metal.

我不确定我对事件的感知是否可信,但我从远处看来,苹果公司是工作组中最苛刻的参与者,也是大家自然而然最担心会毁掉整个尝试的参与者,因此据报道,苹果公司得到了他们要求的一切,WebGPU 看起来非常像 Metal。

But Metal was always reportedly the nicest of the three modern graphics APIs to use, so that's… good?

但据报道,Metal一直是三种现代图形API中最好用的,所以这是好事吗?

Encouraged by the success with ANGLE (which by this point was starting to see use as a standalone library in non-web apps¹¹), and mindful people would want to use this new API with WebASM, they took the step of defining the standard simultaneously as a JavaScript IDL and a C header file, so non-browser apps could use it as a library.

受到ANGLE的成功的鼓舞(到这个时候,它开始在非Web应用程序中作为独立库使用¹¹),并且意识到人们想要将这个新API与WebASM一起使用,他们采取了将标准同时定义为JavaScript IDL和C头文件的步骤,以便非浏览器应用程序可以将其用作库。

WebGPU is the child of ANGLE and Metal. WebGPU is the missing open-source "ergonomic layer" for Vulkan.

WebGPU 是 ANGLE 和 Metal 的产物。WebGPU 是 Vulkan 缺失的开源“人性化层”。

WebGPU is in the web browser, and Microsoft and Apple are on the browser standards committee, so they're "bought in", not only does WebGPU work good-as-native on their platforms but anything WebGPU can do will remain perpetually feasible on their OSes regardless of future developer lock-in efforts.

WebGPU在Web浏览器中,微软和苹果都在浏览器标准委员会中,所以他们“买入了”,不仅WebGPU在他们的平台上能够像原生应用一样运行良好,而且WebGPU能做的任何事情都将永远在他们的操作系统上保持可行,无论未来是否有开发者锁定的努力。

(You don't have to worry about feature drift like we're already seeing with MoltenVK.)

(你不必担心我们已经在 MoltenVK 上看到的那种功能漂移问题。)

WebGPU will be on day one (today) available with perfectly equal compatibility for JavaScript/TypeScript (because it was designed for JavaScript in the first place), for C++ (because the Chrome implementation is in C, and it's open source) and for Rust (because the Firefox implementation is in Rust, and it's open source).

从第一天(也就是今天)起,WebGPU 就能以完全同等的兼容性用于 JavaScript/TypeScript(因为它本来就是为 JavaScript 设计的)、C++(因为 Chrome 的实现是用 C 写的,而且是开源的)和 Rust(因为 Firefox 的实现是用 Rust 写的,而且是开源的)。

I feel like WebGPU is what I've been waiting for this entire time.

我觉得WebGPU就是我一直等待的。

What's it like? 用起来怎么样?

I can't compare to DirectX or Metal, as I've personally used neither.

与DirectX和Metal相比,我无法进行比较,因为我个人都没有使用过。

But especially compared to OpenGL and Vulkan, I find WebGPU really refreshing to use.

但特别是与OpenGL和Vulkan相比,我发现使用WebGPU真的很令人耳目一新。

I have tried, really tried, to write Vulkan, and been defeated by the complexity each time.

我尝试过,真的尝试过,去编写Vulkan,但每次都被复杂性击败。

By contrast WebGPU does a good job of adding complexity only when the complexity adds something.

相比之下,WebGPU 做得很好:只有在复杂性能带来些什么的时候,它才增加复杂性。

There are a lot of different objects to keep track of, especially during initialization (see below), but every object represents some Real Thing that I don't think you could eliminate from the API without taking away a useful ability.

在初始化过程中,有很多不同的对象要跟踪(请参见下文),但每个对象都代表了一些真实的东西,我认为如果从API中消除这些对象,将会减少一些有用的能力。

(And there is at least the nice property that you can stuff all the complexity into init time and make the process of actually drawing a frame very terse.)

(并且至少有一个好的特性,即你可以把所有的复杂性都放在初始化过程中,让绘制一帧的过程非常简洁。)

WebGPU caters to the kind of person who thinks it might be fun to write their own raymarcher, without requiring every programmer to be the kind of person who thinks it would be fun to write their own implementation of malloc.

WebGPU 照顾到了那种觉得自己写个光线步进渲染器(raymarcher)可能挺好玩的人,而不要求每个程序员都是那种觉得自己实现一遍 malloc 会很好玩的人。

The Problems 问题

There are three Problems. I will summarize them thusly:

有三个问题。我总结如下:

- Text 文本

- Lines 线

- The Abomination 丑恶之物

Text and lines are basically the same problem.

文本和线条基本上是同样的问题。

WebGPU kind of doesn't… have them.

WebGPU 可以说……没有这两样东西。

It can draw lines, but they're only really for debugging– single-pixel width and you don't have control over antialiasing.

它可以画线,但这些线基本只适合调试:只有单像素宽,而且你无法控制抗锯齿。

So if you want a "normal looking" line you're going to be doing some complicated stuff with small bespoke meshes and an SDF shader.

因此,如果您想要一个“正常的”线条,您需要使用一些复杂的定制网格和 SDF 着色器。
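
One common recipe, assumed here rather than prescribed by WebGPU: expand each segment into a quad slightly wider than the line, then in the fragment shader compute the distance from the pixel to the segment and map it to coverage, which gives both thickness and antialiasing (and round caps for free). Below is a CPU version of both halves in TypeScript; in practice `segmentDistance` and `lineCoverage` would be WGSL, and the one-pixel edge band is a tunable assumption.

下面是一种常见做法(这是假设的方案,并非 WebGPU 规定的):把每条线段扩展成一个比线稍宽的四边形,然后在片段着色器里计算像素到线段的距离,并把它映射为覆盖率,这样就同时得到了线宽和抗锯齿(还顺带得到圆头端点)。下面用 TypeScript 在 CPU 上实现了这两部分;实际中 `segmentDistance` 和 `lineCoverage` 会用 WGSL 编写,一像素宽的边缘过渡带也是可调的假设。

```typescript
type Vec2 = [number, number];

// Distance from point p to segment ab: the SDF of a zero-width line.
function segmentDistance(p: Vec2, a: Vec2, b: Vec2): number {
  const abx = b[0] - a[0], aby = b[1] - a[1];
  const apx = p[0] - a[0], apy = p[1] - a[1];
  const lenSq = abx * abx + aby * aby;
  // Project p onto ab and clamp to the segment (t in [0, 1]).
  const t = lenSq === 0 ? 0 : Math.max(0, Math.min(1, (apx * abx + apy * aby) / lenSq));
  return Math.hypot(apx - t * abx, apy - t * aby);
}

// Coverage in [0, 1] for a line `width` pixels wide, with a 1-pixel
// linear falloff centered on the edge for antialiasing.
function lineCoverage(p: Vec2, a: Vec2, b: Vec2, width: number): number {
  const d = segmentDistance(p, a, b) - width / 2;
  return Math.max(0, Math.min(1, 0.5 - d));
}

// The "bespoke mesh": a quad around ab, padded by one pixel on every side so
// the soft edge and round caps are not clipped. Six vertices, two triangles,
// both with the same winding.
function lineQuad(a: Vec2, b: Vec2, width: number): Vec2[] {
  const dx = b[0] - a[0], dy = b[1] - a[1];
  const len = Math.hypot(dx, dy) || 1;
  const h = width / 2 + 1;
  const nx = (-dy / len) * h, ny = (dx / len) * h; // scaled normal
  const ex = (dx / len) * h, ey = (dy / len) * h; // scaled direction
  const p0: Vec2 = [a[0] - ex + nx, a[1] - ey + ny];
  const p1: Vec2 = [a[0] - ex - nx, a[1] - ey - ny];
  const p2: Vec2 = [b[0] + ex + nx, b[1] + ey + ny];
  const p3: Vec2 = [b[0] + ex - nx, b[1] + ey - ny];
  return [p0, p1, p2, p2, p1, p3];
}
```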

Similarly with text, you will be getting no assistance– you will be parsing OTF font files yourself and writing your own MSDF shader, or more likely finding a library that does text for you.

类似地,对于文本,您将得不到任何帮助-您将自己解析 OTF 字体文件并编写自己的 MSDF 着色器,或更有可能是找到一个为您提供文本的库。
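
The heart of an MSDF text shader is small: the glyph atlas stores a distance in each of the R, G and B channels, the shader takes their median (which is what keeps corners sharp), scales it to screen pixels, and clamps it to an opacity. The sketch follows the formula given in the msdfgen documentation, assuming 0.5 marks the glyph outline and that `screenPxRange` (how many screen pixels the atlas distance range covers) is computed elsewhere.

MSDF 文本着色器的核心很小:字形图集在 R、G、B 三个通道中各存一个距离,着色器取三者的中位数(正是这一步保住了尖角),换算成屏幕像素距离,再截断为不透明度。下面的草图遵循 msdfgen 文档给出的公式,假设 0.5 表示字形轮廓,并且 `screenPxRange`(图集距离范围对应多少个屏幕像素)在别处计算。

```typescript
// Median of three values, written branch-free as in the msdfgen shader.
function median(r: number, g: number, b: number): number {
  return Math.max(Math.min(r, g), Math.min(Math.max(r, g), b));
}

// Opacity of one pixel, given the RGB sample from the MSDF atlas
// (each channel in [0, 1], with 0.5 on the glyph outline).
function msdfOpacity(rgb: [number, number, number], screenPxRange: number): number {
  const sd = median(rgb[0], rgb[1], rgb[2]);
  const screenPxDistance = screenPxRange * (sd - 0.5);
  return Math.max(0, Math.min(1, screenPxDistance + 0.5));
}
```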

This (no lines or text unless you implement it yourself) is a totally normal situation for a low-level graphics API, but it's a little annoying to me because the web browser already has a sophisticated anti-aliased line renderer (the original Canvas API) and the most advanced text renderer in the world.

这种情况(除非自己实现,否则没有线条和文本)对于低级别图形 API 来说完全正常,但我还是有点恼火,因为 Web 浏览器已经有了一个成熟的抗锯齿线条渲染器(最初的 Canvas API),以及世界上最先进的文本渲染器。

(There is some way to render text into a Canvas API texture and then transfer the Canvas contents into WebGPU as a texture, which should help for some purposes.)

(有一种方法可以将文本渲染到Canvas API纹理中,然后将Canvas内容作为纹理传输到WebGPU中,这应该有助于某些目的。)
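Here is a minimal sketch of that Canvas-to-WebGPU route. It assumes a 2D canvas you control and `rgba8unorm` as the texture format. `device.createTexture` and `device.queue.copyExternalImageToTexture` are real WebGPU calls, but `textToTexture` is a hypothetical helper. The usage flags are written as the numeric values the spec assigns to `GPUTextureUsage` so the sketch runs outside a browser; in browser code you would write `GPUTextureUsage.COPY_DST` and so on.

```typescript
// Values defined for GPUTextureUsage in the WebGPU spec; in browser code,
// prefer the GPUTextureUsage.* constants themselves.
const TEXTURE_BINDING = 0x04;
const COPY_DST = 0x02;
const RENDER_ATTACHMENT = 0x10;

// Hypothetical helper: draw `text` on a 2D canvas, then copy the canvas into a new
// WebGPU texture. `device` would be a GPUDevice and `canvas` an HTMLCanvasElement
// or OffscreenCanvas; they are typed `any` to keep the sketch short.
function textToTexture(device: any, canvas: any, text: string): any {
  const ctx = canvas.getContext("2d");
  ctx.clearRect(0, 0, canvas.width, canvas.height);
  ctx.font = "32px sans-serif";
  ctx.fillStyle = "white";
  ctx.fillText(text, 8, 40); // let the browser's text renderer do the hard part

  const texture = device.createTexture({
    size: [canvas.width, canvas.height],
    format: "rgba8unorm",
    // The destination of copyExternalImageToTexture needs COPY_DST and RENDER_ATTACHMENT;
    // TEXTURE_BINDING lets shaders sample it afterwards.
    usage: TEXTURE_BINDING | COPY_DST | RENDER_ATTACHMENT,
  });
  device.queue.copyExternalImageToTexture(
    { source: canvas },
    { texture },
    [canvas.width, canvas.height],
  );
  return texture;
}
```

Creating a new texture on every call is wasteful. A real app would allocate the texture once and re-copy only when the text changes.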

Then there's WGSL, or as I think of it, The Abomination.

然后还有WGSL,或者我认为的"丑恶"。

You will probably not be as annoyed by this as I am.

你可能不会像我一样被这个问题搞得很烦。

Basically: One of the benefits of Vulkan is that you aren't required to use a particular shader language.

基本上,Vulkan的一个好处是你不必使用特定的着色器语言。

OpenGL uses GLSL, DirectX uses HLSL. Vulkan used a bytecode, called SPIR-V, so you could target it from any shader language you wanted. WebGPU was going to use SPIR-V, but then Apple said no¹².

OpenGL 使用 GLSL,DirectX 使用 HLSL。Vulkan 使用一种名为 SPIR-V 的字节码,因此你可以从任何你想用的着色器语言编译到它。WebGPU 原本也打算使用 SPIR-V,但随后 Apple 表示反对¹²。

So now WebGPU uses WGSL, a new thing developed just for WebGPU, as its only shader language.

所以现在 WebGPU 使用 WGSL(一种专门为 WebGPU 开发的新语言)作为唯一的着色器语言。

As far as shader languages go, it is fine. Maybe it is even good. I'm sure it's better than GLSL.

就着色器语言而言,它还行。也许甚至可以说不错。我确信它比 GLSL 好。

For pure JavaScript users, it's probably objectively an improvement to be able to upload shaders as text files instead of having to compile to bytecode. But gosh, it would have been nice to have that choice! (The "desktop" versions of WebGPU still keep SPIR-V as an option.)

对于纯 JavaScript 用户来说,能以文本形式上传着色器,而不必先编译成字节码,客观上可能是一种改进。但是天哪,要是能有这个选择就好了!(“桌面”版本的 WebGPU 仍然保留 SPIR-V 作为一个选项。)
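To make "shaders as text" concrete: a WGSL shader is just a string you hand to `device.createShaderModule({ code })`. The example shader below is hypothetical. Every resource it reads is declared with a `@group`/`@binding` number, and the JavaScript side later refers to those resources by number, not by name. The `declaredBindings` helper is a rough regex-based check, not a WGSL parser, that the numbers on both sides line up.

```typescript
// A hypothetical WGSL shader, stored as an ordinary string. In a browser you would
// compile it with device.createShaderModule({ code: shaderSource }).
const shaderSource = /* wgsl */ `
struct Params {
  tint : vec4<f32>,
};

@group(0) @binding(0) var<uniform> params : Params;
@group(0) @binding(1) var colorTex : texture_2d<f32>;
@group(0) @binding(2) var colorSampler : sampler;

struct VsOut {
  @builtin(position) pos : vec4<f32>,
  @location(0) uv : vec2<f32>,
};

@vertex
fn vs_main(@location(0) position : vec2<f32>) -> VsOut {
  var result : VsOut;
  result.pos = vec4<f32>(position, 0.0, 1.0);
  result.uv = position * 0.5 + vec2<f32>(0.5, 0.5);
  return result;
}

@fragment
fn fs_main(input : VsOut) -> @location(0) vec4<f32> {
  return textureSample(colorTex, colorSampler, input.uv) * params.tint;
}
`;

// Rough regex check (not a real WGSL parser): list the @group/@binding declarations.
function declaredBindings(wgsl: string): { group: number; binding: number; name: string }[] {
  const re = /@group\((\d+)\)\s*@binding\((\d+)\)\s*var(?:<[^>]*>)?\s+(\w+)/g;
  const found: { group: number; binding: number; name: string }[] = [];
  let m: RegExpExecArray | null;
  while ((m = re.exec(wgsl)) !== null) {
    found.push({ group: Number(m[1]), binding: Number(m[2]), name: m[3] });
  }
  return found;
}

// Bind group entries address resources by binding number, matching the shader above.
// In a browser: device.createBindGroup({ layout: pipeline.getBindGroupLayout(0), entries }).
function makeBindGroupEntries(uniformBuffer: unknown, textureView: unknown, sampler: unknown) {
  return [
    { binding: 0, resource: { buffer: uniformBuffer } }, // params
    { binding: 1, resource: textureView }, // colorTex
    { binding: 2, resource: sampler }, // colorSampler
  ];
}
```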

How do I use it? 如何使用它?

You have three choices for using WebGPU:

使用WebGPU有三个选择:

Use it in JavaScript in the browser, use it in Rust/C++ compiled to WebAssembly inside the browser, or use it in Rust/C++ in a standalone app.

在浏览器中通过 JavaScript 使用;在浏览器中通过编译为 WebAssembly 的 Rust/C++ 使用;或在独立应用程序中通过 Rust/C++ 使用。

The Rust/C++ APIs are as close to the JavaScript version as language differences will allow;

对于Rust/C++,它们的API与JavaScript版本的区别仅限于语言差异。

the in-browser/out-of-browser APIs for Rust and C++ are identical (except for standalone-specific features like SPIR-V).

对于在浏览器内和外的Rust和C++ API是相同的(除了独立应用程序特定的功能,如SPIR-V)。

In standalone apps you embed the WebGPU implementation from Chrome (Dawn) or Firefox (wgpu) as a library; your code doesn't need to know whether the WebGPU library is a real library or just routes your calls through to the browser.

在独立应用程序中,您可以将 Chrome(Dawn)或 Firefox(wgpu)的 WebGPU 实现作为库嵌入;您的代码无需知道 WebGPU 库是真正的库,还是只是把您的调用转发给浏览器。

Regardless of language, the official WebGPU spec document on w3.org is a clear, readable reference guide to the API, suitable for just reading in a way standard specifications sometimes aren't. (I haven't spent as much time looking at the WGSL spec, but it seems about the same.) If you get lost while writing WebGPU, I really do recommend checking the spec.

无论使用何种语言,w3.org 上的官方 WebGPU 规范文档都是一份清晰易读的参考指南,适合直接通读,而标准规范有时并不适合这样读。(我在 WGSL 规范上花的时间没那么多,但它看起来也差不多。)如果在编写 WebGPU 时迷失了方向,我真心建议查阅该规范。

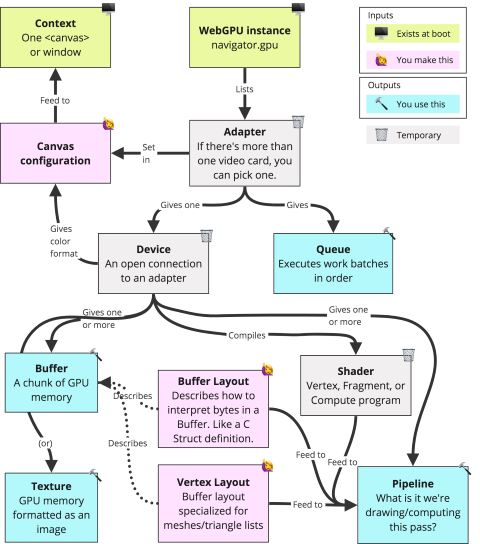

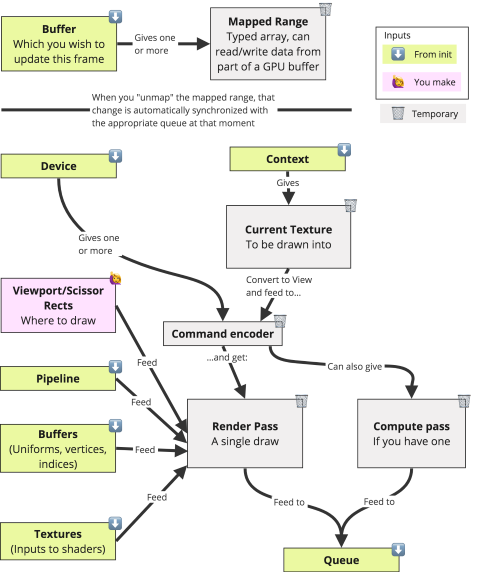

Most of the "work" in WebGPU, other than writing shaders, consists of the construction (when your program/scene first boots) of one or more "pipeline" objects, one per "pass", which describe "what shaders am I running, and what kind of data can get fed into them?"¹³.

除了编写着色器之外,WebGPU 中的大部分“工作”都在于(在程序/场景首次启动时)构建一个或多个“管线”对象,每个“pass”一个,用来描述“我要运行哪些着色器,以及可以向它们输入什么类型的数据?”
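Here is a sketch of what one such pipeline description looks like as a plain object, the thing you would pass to `device.createRenderPipeline()`. Assumptions: the shader module has entry points named `vs_main` and `fs_main`, and there is one vertex attribute of two `float32` values. The helper name is hypothetical, and the object is left untyped; in a TypeScript project you would annotate it as `GPURenderPipelineDescriptor` from `@webgpu/types`. `layout: "auto"` asks WebGPU to derive the bind group layouts from the shader, and you can later fetch one with `pipeline.getBindGroupLayout(0)`.

```typescript
// Hypothetical helper returning a GPURenderPipelineDescriptor-shaped plain object.
// `module` would come from device.createShaderModule(...); `format` would usually be
// navigator.gpu.getPreferredCanvasFormat().
function makeRenderPipelineDescriptor(module: unknown, format: string) {
  return {
    layout: "auto", // derive bind group layouts from the shader's @group/@binding declarations
    vertex: {
      module,
      entryPoint: "vs_main", // assumed entry point name
      buffers: [
        {
          arrayStride: 2 * 4, // bytes per vertex: two float32 values (x, y)
          attributes: [{ shaderLocation: 0, offset: 0, format: "float32x2" }],
        },
      ],
    },
    fragment: {
      module,
      entryPoint: "fs_main", // assumed entry point name
      targets: [{ format }], // one color output, in the canvas's format
    },
    primitive: { topology: "triangle-list" },
  };
}
```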

You can chain pipelines end-to-end within a queue: have a compute pass generate a vertex buffer, have a render pass render into a texture, do a final render pass which renders the computed vertices with the rendered texture.

这些管线对象可以在队列中链接在一起,例如:计算 pass 生成一个顶点缓冲区,渲染 pass 渲染一个纹理,最后的渲染 pass 使用已渲染的纹理和计算得到的顶点进行渲染。
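Here is a sketch of that chaining for a single frame: a compute pass writes vertices into a buffer, then a render pass draws them. Parameters are typed `any` to keep it short. The sketch assumes that `vertexBuffer` was created with both `STORAGE` and `VERTEX` usage, that it is bound as a storage buffer inside `computeBindGroup`, and that the compute shader declares `@workgroup_size(64)`. The method names (`beginComputePass`, `dispatchWorkgroups`, `end`, `finish`, `queue.submit`) are the real WebGPU ones; `encodeFrame` itself is hypothetical.

```typescript
// Hypothetical per-frame function: compute pass -> render pass -> one submit.
function encodeFrame(
  device: any,
  computePipeline: any,
  computeBindGroup: any,
  renderPipeline: any,
  vertexBuffer: any,
  targetView: any,
  vertexCount: number,
): void {
  const encoder = device.createCommandEncoder();

  // Pass 1: the compute shader writes vertex positions into vertexBuffer.
  const compute = encoder.beginComputePass();
  compute.setPipeline(computePipeline);
  compute.setBindGroup(0, computeBindGroup);
  compute.dispatchWorkgroups(Math.ceil(vertexCount / 64)); // assumes @workgroup_size(64)
  compute.end(); // a pass must be ended before the next one begins

  // Pass 2: draw the vertices the compute pass just produced.
  const render = encoder.beginRenderPass({
    colorAttachments: [{
      view: targetView,
      clearValue: { r: 0, g: 0, b: 0, a: 1 },
      loadOp: "clear",
      storeOp: "store",
    }],
  });
  render.setPipeline(renderPipeline);
  render.setVertexBuffer(0, vertexBuffer);
  render.draw(vertexCount);
  render.end();

  // The whole frame goes to the GPU as one command buffer.
  device.queue.submit([encoder.finish()]);
}
```

Once the pipelines and bind groups exist, this is all the per-frame code there is, which is why pushing complexity into init time pays off.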

Here, in diagram form, are all the things you need to create to initially set up WebGPU and then draw a frame.

在这里,以图表的形式展示了在初始设置WebGPU和绘制一帧所需创建的所有对象。

This might look a little overwhelming.

这可能看起来有点令人生畏。

Don't worry about it! In practice you're just going to be copying and pasting a big block of boilerplate from some sample code.

不要担心!实际上,你只需要从一些示例代码中复制粘贴一个大的样板即可。

However, at some point you're going to need to go back and change that copy-pasted boilerplate, and then you'll want to come back and look up the difference between these objects.

但是,在某些时候,你需要回去修改复制的样板,然后你就需要回来查找这些对象之间的区别了。

At init: 初始化时:

For each frame: 每帧:

Some observations in no particular order:

一些无特定顺序的观察:

- When describing a "mesh" (a 3D model to draw), a "vertex" buffer is the list of points in space, and the "index" is an optional buffer containing the order in which to draw the points. Not sure if you knew that.

- 描述"mesh"(要绘制的三维模型)时,"vertex"缓冲区是空间中点的列表,而"index"是一个可选的缓冲区,包含绘制这些点的顺序。不确定你是否知道这一点。

- Right now the "queue" object seems a little pointless because there's only ever one global queue. But someday WebGPU will add threading and then there might be more than one.

- 现在的 "queue" 对象似乎有点无意义,因为只有一个全局队列。但是,WebGPU 将来可能会添加线程,然后可能会有不止一个队列。

- A command encoder can only be working on one pass at a time; you have to mark one pass as complete before you request the next one. But you can make more than one command encoder and submit them all to the queue at once.

- 命令编码器一次只能处理一个 pass;必须先将一个 pass 标记为完成,才能请求下一个 pass。但是,您可以创建多个命令编码器,并将它们一次性全部提交到队列。

- Back in OpenGL when you wanted to set a uniform, attribute, or texture on a shader, you did it by name. In WebGPU you have to assign these things numbers in the shader and you address them by number.¹⁴

- 在 OpenGL 中,当您想要在着色器上设置 uniform、attribute 或 texture 时,是通过名称来完成的。而在 WebGPU 中,您必须在着色器中为这些内容分配编号,并通过编号来引用它们。

- Although textures and buffers are two different things, you can instruct the GPU to copy a texture into a buffer or vice versa.

- 虽然 texture 和 buffer 是两种不同的对象,但您可以命令 GPU 把 texture 复制到 buffer 中,或者反过来。

- I do not list "pipeline layout" or "bind group layout" objects above because I honestly don't understand what they do. I've only ever set them to default/blank.

- 我没有在上面列出"pipeline layout"或"bind group layout"对象,因为我实在不明白它们的作用。我只设置为默认/空白。

- In the Rust API, a "Context" is called a "Surface". I don't know if there's a difference.

- 在 Rust API 中,"Context" 被称为 "Surface"。我不知道是否有区别。
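Here are two of the points above in code, as a minimal sketch with hypothetical names. The first part stores a square as four vertices plus six indices (two triangles that share vertices 0 and 2) and expands the indices to show what actually gets drawn. The second part covers the texture-to-buffer direction: `copyTextureToBuffer` requires `bytesPerRow` to be a multiple of 256, so rows usually need padding.

```typescript
// A square stored as an indexed mesh: 4 unique vertices (x, y)...
const squareVertices = new Float32Array([
  -0.5, -0.5, // vertex 0: bottom-left
  0.5, -0.5, // vertex 1: bottom-right
  0.5, 0.5, // vertex 2: top-right
  -0.5, 0.5, // vertex 3: top-left
]);
// ...plus 6 indices, three per triangle; vertices 0 and 2 are shared.
const squareIndices = new Uint16Array([0, 1, 2, 0, 2, 3]);

// Expand an indexed mesh into the per-corner positions the GPU ends up drawing.
function expandIndexed(vertices: Float32Array, indices: Uint16Array, componentsPerVertex: number): number[][] {
  const corners: number[][] = [];
  for (let k = 0; k < indices.length; k++) {
    const start = indices[k] * componentsPerVertex;
    corners.push(Array.from(vertices.subarray(start, start + componentsPerVertex)));
  }
  return corners;
}

// copyTextureToBuffer (and copyBufferToTexture) require bytesPerRow to be a
// multiple of 256, so each texture row is padded up when it lands in a buffer.
function paddedBytesPerRow(width: number, bytesPerPixel: number): number {
  return Math.ceil((width * bytesPerPixel) / 256) * 256;
}
```

In a browser, the index buffer would be uploaded with `device.queue.writeBuffer(...)` and drawn with `pass.setIndexBuffer(indexBuffer, "uint16")` followed by `pass.drawIndexed(6)`.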

Getting a little more platform-specific: 关于更多特定平台:

TypeScript / NPM world

针对 TypeScript / NPM 环境

The best way to learn WebGPU for TypeScript I know is Alain Galvin's "Raw WebGPU" tutorial.

目前我所知道的学习 TypeScript 版 WebGPU 的最好方式,是阅读 Alain Galvin 的 "Raw WebGPU" 教程。

It is a little friendlier to someone who hasn't used a low-level graphics API before than my sandbag introduction above, and it has a list of further resources at the end.

相较于我上面的简介,该教程对于没有使用过低级别图形 API 的人更加友好,并且在结尾提供了更多的资源列表。

Since code snippets don't get you something runnable, Alain's tutorial links a completed source repo with the tutorial code, and also I have a sample repo which is based on Alain's tutorial code and adds simple animation as well as Preact¹⁵. Both my and Alain's examples use NPM and WebPack¹⁶.

由于代码片段不能直接运行,Alain 的教程提供了一个完整的源码仓库,同时我也提供了一个样例仓库,该样例基于 Alain 的教程代码,增加了简单的动画和 Preact 组件。我和 Alain 的样例都使用 NPM 和 WebPack。

If you don't like TypeScript: I would recommend using TypeScript anyway for WebGPU.

如果你不喜欢 TypeScript:我仍然推荐在 WebGPU 中使用 TypeScript。

You don't actually have to add types to anything except your WebGPU calls; you can type everything else as "any".

实际上,除了 WebGPU 调用之外,您不必为任何东西添加类型,其他内容都可以标注为“any”。

But building that pipeline object involves big trees of descriptors containing other descriptors, and it's all just plain JavaScript dictionaries, which is nice, until you misspell a key, or forget a key, or accidentally pass the GPUPrimitiveState table where it wanted the GPUVertexState table.

但构建管线对象需要用到层层嵌套的大型描述符树,而且它们全都只是普通的 JavaScript 字典;这很好,直到你拼错一个键、漏掉一个键,或者不小心把“GPUPrimitiveState”表传到了需要“GPUVertexState”表的地方。

Your choices are to let TypeScript tell you what errors you made, or be forced to reload over and over watching things break one at a time.

你的选择是让TypeScript告诉你犯了哪些错误,还是被迫一遍又一遍地重新加载并逐个查看出现的错误。
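Here is the kind of mistake the type checker catches. To keep the sketch self-contained, it uses simplified stand-in interfaces rather than the real descriptor types, which come from the `@webgpu/types` package. The `// @ts-expect-error` lines mark object literals that fail to compile.

```typescript
// Simplified stand-ins for the real descriptor types; only a few fields are modeled.
interface PrimitiveStateSketch {
  topology?: "point-list" | "line-list" | "line-strip" | "triangle-list" | "triangle-strip";
  cullMode?: "none" | "front" | "back";
}
interface VertexStateSketch {
  module: object;
  entryPoint?: string;
}
interface PipelineDescriptorSketch {
  vertex: VertexStateSketch;
  primitive?: PrimitiveStateSketch;
}

const fakeModule = {}; // stands in for a GPUShaderModule

// Correct: type-checks.
const good: PipelineDescriptorSketch = {
  vertex: { module: fakeModule, entryPoint: "vs_main" },
  primitive: { topology: "triangle-list" },
};

// Misspelled key: caught at compile time instead of after a page reload.
// @ts-expect-error "topolgy" is not a known property of PrimitiveStateSketch
const typo: PipelineDescriptorSketch = { vertex: { module: fakeModule }, primitive: { topolgy: "triangle-list" } };

// Primitive state passed where vertex state was wanted (and `module` is missing).
// @ts-expect-error object literal does not match VertexStateSketch
const swapped: PipelineDescriptorSketch = { vertex: { topology: "triangle-list" } };
```

At runtime, all three objects are just dictionaries, which is exactly the problem: only the compiler notices that two of them are wrong.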

I don't know what an NPM is, I just wanna write CSS and my stupid little script tags

我不知道 NPM 是什么,我只想写 CSS 和我那些傻乎乎的小 script 标签

If you're writing simple JS embedded in web pages rather than joining the NPM hivemind, honestly you might be happier using something like three.js¹⁷ in the first place, instead of putting up with WebGPU's (relatively speaking) hyper-low-level verbosity.

如果你只是想编写嵌入在网页中的简单JS,而不是使用NPM,则老实说,你可能更愿意使用像three.js这样的库,而不是容忍WebGPU相对较为低级别的冗长。

You can include three.js directly in a script tag using existing CDNs (although I would recommend adding a Subresource Integrity (SRI) hash to protect yourself from the CDN going rogue).

你可以通过现有的 CDN 直接在 script 标签中引入 three.js(不过我建议加上子资源完整性(SRI)哈希,以防 CDN 失控)。
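If you do load three.js from a CDN, the integrity value is just a base64-encoded digest prefixed with the algorithm name. Here is a minimal Node sketch for computing it from the exact file you intend to load; the helper name is hypothetical.

```typescript
import { createHash } from "node:crypto";

// Compute a Subresource Integrity value ("<algorithm>-<base64 digest>") for a file.
function sriHash(fileContents: string | Uint8Array, algorithm: "sha256" | "sha384" | "sha512" = "sha384"): string {
  const digest = createHash(algorithm).update(fileContents).digest("base64");
  return `${algorithm}-${digest}`;
}
```

The result goes into the tag as `<script src="…" integrity="sha384-…" crossorigin="anonymous"></script>`. The `crossorigin` attribute is needed so the browser can check integrity on a file from another origin.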

But! If you want to use WebGPU, Alain Galvin's tutorial, or renderer.ts from his sample code, still gets you what you want.

但是!如果你想使用WebGPU,Alain Galvin的教程或他的示例代码中的renderer.ts仍然能够满足你的需求。

Just go through it, and anywhere there's a little `: GPUBlah` wart on a variable, delete it; the TypeScript is now JavaScript.

只需通读一遍,凡是变量上带有“: GPUBlah”这种小尾巴的地方都删掉,TypeScript 就变成了 JavaScript。

And as I've said, the complexity of WebGPU is mostly in pipeline init.

正如我所说,WebGPU 的复杂性主要体现在管线初始化上。

So I could imagine writing a single <script> that sets up a pipeline object that is good for various purposes, and then including that script in a bunch of small pages that each import¹⁸ the pipeline, feed some floats into a buffer mapped range, and draw.

因此,我可以想象编写一个单独的 <script>,用它建立一个适用于多种用途的管线对象,然后在一堆小页面中引入这个脚本;每个页面导入该管线,把一些浮点数写入缓冲区的映射范围,然后绘制。
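The "feed some floats into a buffer mapped range" step might look like this: create the buffer already mapped, copy the floats into the mapped range, and unmap it before the GPU touches it. This is a sketch. The device is typed `any`, and the usage flags are passed in so the helper doesn't depend on browser globals; in a page you would pass something like `GPUBufferUsage.VERTEX`. The helper name is hypothetical.

```typescript
// Hypothetical helper: create a GPU buffer that starts out mapped, fill it, then unmap.
function createFloatBuffer(device: any, data: Float32Array, usage: number): any {
  const buffer = device.createBuffer({
    // A Float32Array's byteLength is always a multiple of 4, as mappedAtCreation requires.
    size: data.byteLength,
    usage,
    mappedAtCreation: true,
  });
  new Float32Array(buffer.getMappedRange()).set(data); // write while the buffer is CPU-visible
  buffer.unmap(); // hand the memory to the GPU before the buffer is used
  return buffer;
}
```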

You could do the whole client page in like ten lines probably.

你可能只需要用十行代码就可以完成整个客户端页面。

Rust

So as I've mentioned, one of the most exciting things about WebGPU to me is you can seamlessly cross-compile code that uses it without changes for either a browser or for desktop.

正如我之前提到的,对于我来说,WebGPU最令人兴奋的事情之一是您可以无需更改代码,无缝地将使用它的代码交叉编译到浏览器或桌面上。

The desktop code uses library-ized versions of the actual browser implementations so there is low chance of behavior divergence.

桌面端的代码使用库化版本的实际浏览器实现,因此行为分歧的可能性很低。

If "include part of a browser in your app" makes you think you're setting up for a code-bloated headache, not in this case;

如果“将浏览器的一部分包含在您的应用程序中”让您认为您正在为代码膨胀的头痛做准备,那么在这种情况下不需要;

I was able to get my Rust "Hello World" down to 3.3 MB, which isn't much worse than SDL, without even trying.

我没怎么费力就把我的 Rust“Hello World”缩减到了 3.3 MB,这并不比 SDL 差多少。

(The browser hello world is like 250k plus a 50k autogenerated loader, again before I've done any serious minification work.)

(在我进行任何严格的缩小工作之前,浏览器的hello world大约为250k加上50k自动生成的加载器。)

If you want to write WebGPU in Rust¹⁹, I'd recommend checking out this official tutorial from the wgpu project, or the examples in the wgpu source repo.

如果你想在Rust中编写WebGPU代码,我建议你查看wgpu项目的官方教程或者wgpu源代码库中的示例。

As of this writing, it's actually a lot easier to use Rust WebGPU on desktop than in browser;

截至撰写本文时,使用Rust WebGPU在桌面端比在浏览器中更容易;

the libraries seem to mostly work fine on web, but the Rust-to-wasm build experience is still a bit rough.

这些库在 Web 上似乎大体运行良好,但 Rust 到 wasm 的构建体验仍然有点粗糙。

I did find a pretty good tutorial for wasm-pack here²⁰.

我在这里找到了一个相当不错的 wasm-pack 教程。

However most Rust-on-web developers seem to use (and love) something called "Trunk".

然而,大多数Rust-on-web开发者似乎都在使用(并且喜欢)一个称为“Trunk”的工具。

I haven't used Trunk yet but it replaces wasm-pack as a frontend, and seems to address all the specific frustrations I had with wasm-pack.

我还没有使用过Trunk,但它可以替代wasm-pack作为前端,并且似乎解决了我使用wasm-pack时的所有具体问题。

I do have also a sample Rust repo I made for WebGPU, since the examples in the wgpu repo don't come with build scripts.

我还制作了一个 WebGPU 的 Rust 示例代码库,因为 wgpu 示例并没有附带构建脚本。

My sample repo is very basic²¹ and is just the "hello-triangle" sample from the wgpu project but with a Cargo.toml added.

我的示例代码库非常基础,只是 wgpu 项目中的 "hello-triangle" 示例,外加一个 Cargo.toml 文件。

It does come with working single-line build instructions for web, and when run on desktop with --release it minimizes disk usage.

它附带了可用的单行 Web 构建指令;在桌面上以 --release 运行时,它会尽量减少磁盘占用。

(It also prints an error message when run on web without WebGPU, which the wgpu sample doesn't.) You can see this sample's compiled form running in a browser here.

(它还会在没有 WebGPU 的情况下在 Web 上运行时打印错误消息,而 wgpu 示例没有这样做。)您可以在此处查看此示例代码库在浏览器中运行的编译形式。

C++

If you're using C++, the library you want to use is called "Dawn". I haven't touched this but there's an excellently detailed-looking Dawn/C++ tutorial/intro here. Try that first.

如果你想用C++编写WebGPU,那么你需要使用名为“Dawn”的库。我个人没有使用过它,但是这里有一个非常详细的Dawn/C++教程介绍,你可以先尝试一下这个。

Posthuman Intersecting Tetrahedron

后人类相交四面体

I have strange, chaotic daydreams of the future.

我有一些奇怪、混乱的未来幻想。

There's an experimental project called rust-gpu that can compile Rust to SPIR-V.

有一个叫做rust-gpu的实验项目可以将Rust编译成SPIR-V。

SPIR-V to WGSL compilers already exist, so in principle it should already be possible to write WebGPU shaders in Rust, it's just a matter of writing build tooling that plugs the correct components together.

已经存在SPIR-V到WGSL的编译器,所以原则上已经可以使用Rust编写WebGPU着色器,只是需要编写将正确组件连接在一起的构建工具。

(I do feel, and complained above, that the WGSL requirement creates a roadblock for use of alternate shader languages in dynamic languages, or languages like C++ with a broken or no build system— but Rust is pretty good at complex pre-build processing, so as long as you're not literally constructing shaders on the fly then probably it could make this easy.)

(我确实觉得,并且在上文也抱怨过,WGSL 这一要求给在动态语言中,或在像 C++ 这样构建系统残缺甚至没有构建系统的语言中使用其他着色器语言造成了障碍;但 Rust 很擅长复杂的预构建处理,所以只要你不是真的在运行时动态构造着色器,它很可能可以让这件事变得简单。)

I imagine a pure-Rust program where certain functions are tagged as compile-to-shader, and I can share math helper functions between my shaders and my CPU code, or I can quickly toggle certain functions between "run this as a filter before writing to buffer" or "run this as a compute shader" depending on performance considerations and whim.

我想象一下,有一个纯 Rust 编写的程序,其中某些函数被标记为编译成着色器,我可以在我的着色器和 CPU 代码之间共享数学辅助函数,或者根据性能考虑和兴致将某些函数快速切换为“在写入缓冲区之前作为过滤器运行”或“作为计算着色器运行”。

I have an existing project that uses compute shaders, and answering the question "would this be faster on the CPU, or in a compute shader?"²² involved writing all my code twice and then writing complex scaffold code to handle switching back and forth.

我有一个使用计算着色器的现有项目。为了回答“这在 CPU 上运行更快,还是在计算着色器里运行更快?”这个问题,我不得不把所有代码都写两遍,然后再编写复杂的脚手架代码来处理两者之间的来回切换。

That could have all been automatic.

这一切都可以自动完成。
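This is not how rust-gpu works, but the shape of the idea can be sketched in TypeScript. You write the kernel body once as an ordinary function, then emulate a 1-D compute dispatch on the CPU by calling it for every invocation ID, the way `dispatchWorkgroups` would launch it with a workgroup size of 64. Everything here is hypothetical. A GPU version would still need the same body ported to WGSL, which is exactly the duplication the paragraph above wishes away.

```typescript
// Hypothetical kernel signature: one invocation per global ID, like a WGSL compute entry point.
type Kernel = (globalId: number, input: Float32Array, output: Float32Array) => void;

// Emulate dispatchWorkgroups(ceil(n / workgroupSize)) on the CPU: every invocation
// in every workgroup runs, including the spare ones past the end of the data.
function dispatchOnCpu(kernel: Kernel, input: Float32Array, output: Float32Array, workgroupSize = 64): void {
  const workgroups = Math.ceil(input.length / workgroupSize);
  for (let wg = 0; wg < workgroups; wg++) {
    for (let local = 0; local < workgroupSize; local++) {
      kernel(wg * workgroupSize + local, input, output);
    }
  }
}

// A shared math helper that CPU code and the kernel both use.
function smoothstep(edge0: number, edge1: number, x: number): number {
  const t = Math.min(1, Math.max(0, (x - edge0) / (edge1 - edge0)));
  return t * t * (3 - 2 * t);
}

// Example kernel. Like a real WGSL kernel, it bounds-checks its own ID.
const fadeKernel: Kernel = (i, input, output) => {
  if (i >= input.length) return;
  output[i] = smoothstep(0, 1, input[i]);
};
```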

Could I make things even weirder than this?

我能不能把事情搞得更奇怪一些呢?

I like Rust for low-level engine code, but sometimes I'd prefer to be writing TypeScript for business logic/"game" code.

我喜欢用 Rust 写低级引擎代码,但有时我更喜欢用 TypeScript 写业务逻辑/“游戏”代码。

In the browser I can already mix Rust and TypeScript, there's copious example code for that.

在浏览器中,我已经可以混合使用 Rust 和 TypeScript,在这方面有很多示例代码。

Could I mix Rust and TypeScript on desktop too?

我能不能在桌面上也混合使用 Rust 和 TypeScript?

If wgpu is already my graphics engine, I could shove in Servo or QuickJS or something, and write a cross-platform program that runs in browser as TypeScript with wasm-bindgen Rust embedded inside or runs on desktop as Rust with a TypeScript interpreter inside.

如果 wgpu 已经是我的图形引擎,我可以加入 Servo 或 QuickJS 等内容,编写一个跨平台程序,在浏览器中作为嵌入 wasm-bindgen Rust 的 TypeScript 运行,或在桌面上作为 Rust 运行,内部带有一个 TypeScript 解释器。

Most Rust GUI/game libraries work in wasm already, and there's this pure Rust WebAudio implementation

大多数 Rust GUI/游戏库已经可以在 wasm 中工作,还有这个纯 Rust WebAudio 实现

(it's currently not a drop-in replacement for wasm-bindgen WebAudio but that could be fixed).

(目前还不能作为 wasm-bindgen WebAudio 的替代品,但可以修复)。

I imagine creating a tiny faux-web game engine that has all the benefits of Electron without any of the downsides.

我想象创建一个小型的仿 Web 游戏引擎,具有 Electron 的所有优点,但没有任何缺点。

Or I could just use Tauri for the same thing and that would work now without me doing any work at all.

或者我可以使用 Tauri 来完成同样的事情,而无需做任何工作。

Could I make it weirder than that? WebGPU's spec is available as a machine-parseable WebIDL file; would that make it unusually easy to generate bindings for, say, Lua?

还能搞得比这更奇怪吗?WebGPU 的规范提供了一个机器可解析的 WebIDL 文件;这是否会让为 Lua 之类的语言生成绑定变得格外容易?
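As a toy illustration of why a machine-readable WebIDL file helps, here is a regex-based sketch that turns WebIDL `enum` declarations into Lua tables. It is nowhere near a real binding generator: interfaces, dictionaries and methods are ignored. The function name and the Lua output format are assumptions.

```typescript
// Toy generator: WebIDL `enum Name { "a", "b" };` -> Lua `Name = { "a", "b" }`.
function webIdlEnumsToLua(idl: string): string {
  const enumRe = /enum\s+(\w+)\s*\{([^}]*)\}\s*;/g;
  const lines: string[] = [];
  let m: RegExpExecArray | null;
  while ((m = enumRe.exec(idl)) !== null) {
    const name = m[1];
    const values = m[2].match(/"[^"]*"/g) ?? []; // the quoted enum values, quotes included
    lines.push(`${name} = { ${values.join(", ")} }`);
  }
  return lines.join("\n");
}
```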

If I can compile Rust to WGSL and so write a pure-Rust-including-shaders program, could I compile TypeScript, or AssemblyScript or something, to WGSL and write a pure-TypeScript-including-shaders program?

如果我能将Rust编译成WGSL,这样就可以编写一个包含Rust shader的纯Rust程序。我能否将TypeScript或AssemblyScript编译成WGSL,并编写包含TypeScript shader的纯TypeScript程序呢?

Or if what I care about is not having to write my program in two languages and not so much which language I'm writing, why not go the other way?

或者,如果我在乎的是不必用两种语言来写程序,而不太在意用的是哪种语言,那为什么不反过来呢?

Write an LLVM backend for WGSL, compile it to native+wasm, and write an entire program, shaders included, in WGSL. If the W3C thinks WGSL is supposed to be so great, then why not?

为 WGSL 编写一个 LLVM 后端,把它编译为本机代码和 wasm,然后用 WGSL 编写包括着色器在内的整个程序。如果 W3C 认为 WGSL 真有那么好,那为什么不呢?

Okay that's my blog post.

好的,这就是我的博客文章了。

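Comments

1

I suspect you may even be underestimating the impact of WebGPU. I'll make two observations.

First, for AI and machine-learning workloads, the infrastructure situation right now is a mess unless you buy into the Nvidia/CUDA ecosystem. If you do research you pretty much have to, but more and more people just want to run models that have already been trained. Soon WebGPU will be an alternative that more or less Just Works, although I do expect things to be rough. There is also a performance gap, but I can see it closing.

2

SYCL ( https://www.khronos.org/sycl/ ) is progressing well. Worth a look.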

Author: Maeiee


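Copyright notice: Unless otherwise stated, this article is an original work and its copyright belongs to Maeiee; it may not be reproduced without permission.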
